Hello world ..

.. on hyper-exponential.com:

A selection of my personal thoughts on spirituality, tech, entrepreneurship, and other areas that I regularly meditate on: These are partly key reflections on past experiences and partly wild ideas about what our future will look like.

What inspired me to do so?

Shortly after the release of ChatGPT, many people approached me to ask for my thoughts on artificial intelligence (AI) and superintelligence, where things are going from here, and what the bigger picture is.

And to my personal astonishment, what I shared in these conversations more often than not surprised people and gave them fresh, new insights. Things that I considered “obvious” were not that obvious to many at all.

Thus, I concluded that my trains of thought might be interesting not only to me but also to other people, even to people whom I’ve not met or may never meet. If they might see value in reading what I can share, then it’s worth sharing.

The decision to call it “hyper-exponential” is inspired by the overarching trend that I see in various technology fields today, and that is especially pronounced in computing and AI: the overlapping and self-reinforcing impact that areas have on each other when each of them already grows exponentially on its own.

Let us dive into these “hyper-exponential” dynamics, taking AI as an example:

The best-known exponential trend in this area is the so-called “Moore’s law”, which states that the number of transistors on a microchip doubles about every two years. This means that compute power grows exponentially, as does access to computing power due to falling prices. While it might seem that we have reached the limits of Moore’s law because of constraints from quantum physics and thermodynamics, it is still possible to work around these constraints, e.g. with new architectures (such as arm64, which is more energy efficient than x86_64) or by using GPUs instead of CPUs, which allow data to be processed in parallel. This increases the effective computation speed (i.e. reduces the time until a computation is finished) without actually increasing the switching frequency of each individual transistor involved in the computation.
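To put the doubling rule into numbers, here is a small Python sketch. It assumes a perfectly clean doubling every two years, which is an idealization, and the function names are made up for illustration.

```python
def annual_growth_rate(doubling_time_years: float) -> float:
    """Constant yearly growth rate implied by a given doubling time."""
    return 2 ** (1 / doubling_time_years) - 1


def growth_factor(doubling_time_years: float, years: float) -> float:
    """Total growth factor after `years` for a given doubling time."""
    return 2 ** (years / doubling_time_years)


if __name__ == "__main__":
    # Assumption: an idealized doubling every 2 years.
    print(f"Implied yearly growth: {annual_growth_rate(2.0):.1%}")
    print(f"Growth over 10 years: {growth_factor(2.0, 10):.0f}x")
    print(f"Growth over 20 years: {growth_factor(2.0, 20):.0f}x")
```

Under this assumption, a doubling every two years corresponds to roughly 41 % growth per year, or about 32x per decade and about 1000x over two decades.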

And innovation doesn’t stop there. On the horizon, we can already see new paradigms of computing [1], e.g. highly optimized ASICs for AI, analog computing etc., that will make it possible to push computation speeds further by several orders of magnitude over the next decade(s).

As a consequence of Moore’s law and the global availability of cheap computing hardware, two additional trends kicked in: Today, nearly everybody can afford to have at least one computing device permanently at hand (a personal smartphone), and many of us actually own several. As a result, the amount of data that we produce per year and that is available digitally has been growing exponentially since the beginning of the millennium [2]. Currently it is growing at about 25 % per year.

Additionally, with more computing power becoming available, the productivity of people started to grow thanks to the improved tools that they can use [3]. While productivity growth across industry as a whole varied between 1 % and 4 % over the last couple of decades, for software engineering and especially AI, there have been a couple of “step-functions” that enabled rapid growth:

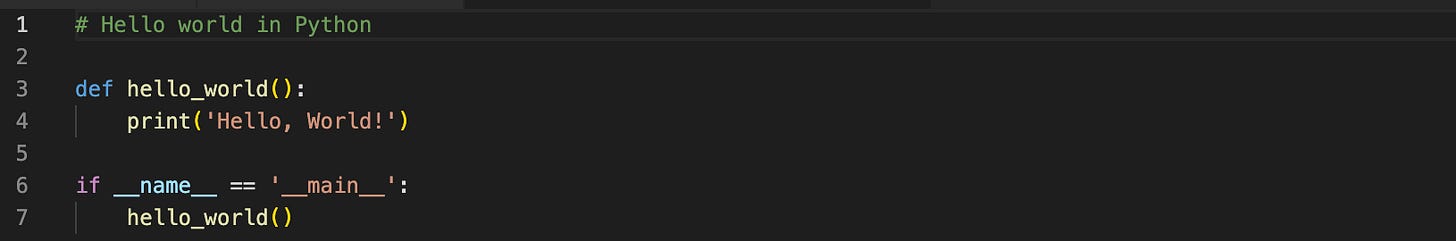

The transition from low-level machine instructions to the first general-purpose languages like Fortran, C and BASIC, followed by a gradual move towards high-level languages like Java and later Python.

The growing amount of available open source code, open libraries, open tutorials and openly published research. In combination with a free and powerful search engine, everybody has access to tremendous knowledge, wherever it might be hidden on the World Wide Web.

The productivity developments described above not only increased the productivity of people in the field but also gradually lowered the entry barrier for newcomers. Nowadays a laptop and internet access are enough to get started. Everything else, like educational material, the necessary computing resources in the cloud or the necessary data, can be found online.

On the other side, due to the tremendous productivity gains offered by automation and AI, demand in the labor market constantly pulls people into the field. It attracts the brightest minds not only from computer science but also from adjacent fields like maths, physics, electrical engineering etc.

Consequently, the number of people working in the field of data analytics or AI is growing at about 5 % to 30 % per year, depending on the country [4]. By 2026, it is expected that over 100 million people will work in the field.

In my opinion, this might even be an underestimate: After the no-code paradigm allowed people to build professional-looking websites without any background in full-stack development, I would argue that the next big step change is around the corner: large language models (LLMs) taking the role of a general-purpose kind of compiler between natural language and executable program code. This means that anybody who can use a keyboard, or later just her or his voice, will be able to “write software”, thus lowering the entry barrier as far as it can go. Think of it as “prompt engineering” becoming the new “coding”; however, the need for “engineering” in “prompt engineering” might be eliminated quickly as LLMs get better at writing purposeful software even from “non-elaborated prompts”.

And I guess it’s possible to see how all these trends mutually accelerate each other: More data makes it possible to build better models, which gives higher productivity to people working in the field. Additionally, it further lowers the barrier for new people to enter. More people means more data but also better tools for people in the field of hardware development, which is further fueled by the additional demand generated by more people working in the field. Better and cheaper hardware in turn allows for more data generation, better models and higher availability. To some extent it’s a supercharged version of the developer productivity flywheel, expanded to a larger scale.

I would argue that in other fields such as BioTech, similar trends mutually reinforce each other’s acceleration (even though their underlying mechanisms and dynamics might be very different), leading to a similar hyper-exponential trend. An example of this can be seen in this talk: [5]

Even if it’s a really rough back-of-the-envelope calculation: if we assume that each field grows at about 25 % a year, we can expect that whatever we have now will be improved by 100x in 5 to 6 years from now.
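One way to arrive at this figure, as a rough sketch: assume that the four trends discussed above (hardware, data, productivity and people) each grow by 25 % a year and that their effects simply multiply. Both the equal rates and the multiplication are simplifying assumptions for illustration, not a measured model, and the function names are hypothetical.

```python
import math


def combined_yearly_factor(rates):
    """Multiply the yearly growth factors (1 + rate) of all trends."""
    factor = 1.0
    for rate in rates:
        factor *= 1.0 + rate
    return factor


def years_to_reach(target, yearly_factor):
    """Years of compounding at `yearly_factor` needed to reach `target`x."""
    return math.log(target) / math.log(yearly_factor)


if __name__ == "__main__":
    # Assumption: four trends, each growing 25 % a year, effects multiply.
    rates = [0.25, 0.25, 0.25, 0.25]
    yearly = combined_yearly_factor(rates)
    print(f"Combined yearly factor: {yearly:.2f}x")
    print(f"Years to 100x (combined): {years_to_reach(100, yearly):.1f}")
    print(f"Years to 100x (single trend): {years_to_reach(100, 1.25):.1f}")
```

Under these assumptions the combined factor is about 2.44x per year, so 100x is reached after a bit more than five years, whereas a single trend growing at 25 % a year would need over 20 years.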

So, if you feel that the pace of development in AI is at “runaway speed”, I can confirm your gut feeling: Your observation is correct, the field is moving forward on hyper-steroids – right here, right now, in front of our eyes.

Can we slow it down, stop it or even wind it back? I highly doubt it, at least not deliberately. And I also think we shouldn't. More on this shortly ..

[1] https://www.future-of-computing.com

[4] https://www.technologyreview.com/2021/06/10/1026008/the-coming-productivity-boom/