Let the beast out of its cage!

Thank you, OpenAI Board

Many of you might have seen Sam Altman's interview with Lex Fridman [1] and drawn your own conclusions about it. For those who have not, I would like to highlight one thing that I found particularly interesting.

At one point in the interview, Lex asks Sam why they released ChatGPT and whether he thinks this was the right thing to do, given the controversy the release sparked. Many influential figures in AI, e.g. Geoffrey Hinton, are using their prominence to warn about the dangers of superintelligence. They insist on developing superintelligence and solving superalignment within a “confined and safe environment”, which in particular would mean NOT plugging it directly into the Internet, the main nervous system of our modern world. Additionally, now that the genie is out of the bottle, an arms race for AI dominance has been ignited among the most powerful corporations in the world. It is now being played out in public, with many more jumping on the bandwagon, be they start-ups, VCs, or entire governments.

One may hold very different opinions on Sam Altman and his initial motives for releasing ChatGPT. Those motives may well have been the pressure to demonstrate traction in order to secure new funding from investors; that would surprise no one who has been involved in building a venture-backed business. And what he says in the interview may well be a post-hoc rationalization of the company's decisions, if not outright PR to obscure the actual motives.

However, I would argue that his point, “it’s necessary to expose people to what is going on, so that they can learn and adapt”, is correct and important regardless of whether it was the original motivation for releasing ChatGPT.

Indeed, most people were not even slightly aware of what was happening behind the curtains at Google DeepMind, OpenAI, Stability AI, etc. If OpenAI's goal was to create buzz and attention, it achieved that goal many times over.

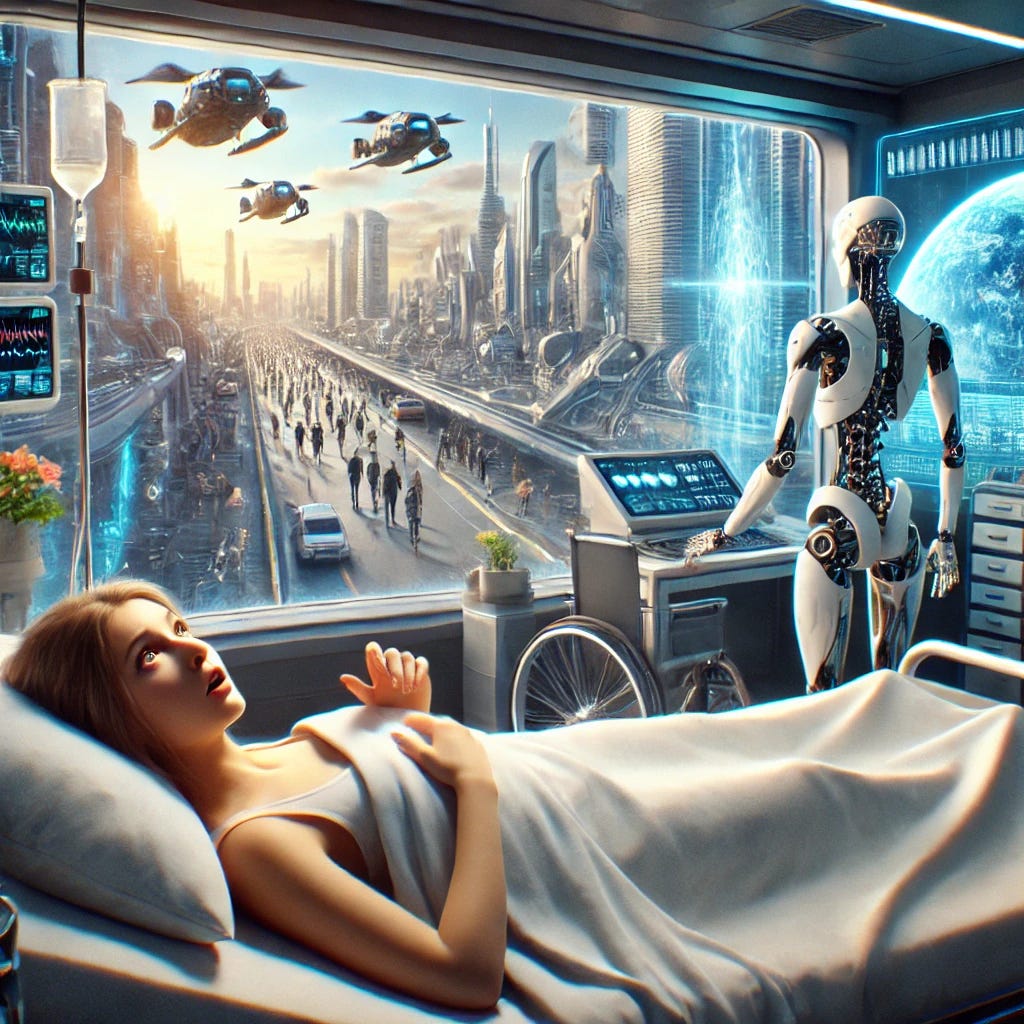

While the additional acceleration of AI development might pose dangers of its own, I would argue that keeping people blindfolded about the rapid progress in the field would be even more harmful. It would be a bit like the 2003 German film “Good Bye, Lenin!”, in which a woman in East Germany falls into a coma shortly before the fall of the Berlin Wall and wakes up to find herself in a new world that is very strange to her.

The awareness created among the public, whether at the level of individuals, organizations, or governments, is crucial for creating legislation, for demanding that superintelligence be developed as safely as possible, and for preparing ourselves for what is to come. It was an important wake-up call, reminding us of the hyper-exponential technological progress taking place right under our noses while we as a society have not been paying sufficient attention to it.

So thank you, OpenAI (and all the people who made this happen), for drawing a bit of our attention away from social media and toward the central issue of our time: the hyper-exponential development of technology, especially superintelligence.

[1] Sam Altman: OpenAI CEO on GPT-4, ChatGPT, and the Future of AI | Lex Fridman Podcast #367