Object Recognition is Becoming ..

.. Absolute Commodity

Every time OpenAI announces major new features for its AI products, you may have noticed a buzz over which start-ups will soon go out of business because their unique selling proposition (USP) has been rendered “irrelevant”.

With this post, I would like to add something to this list, and this time it is not only start-ups that are affected.

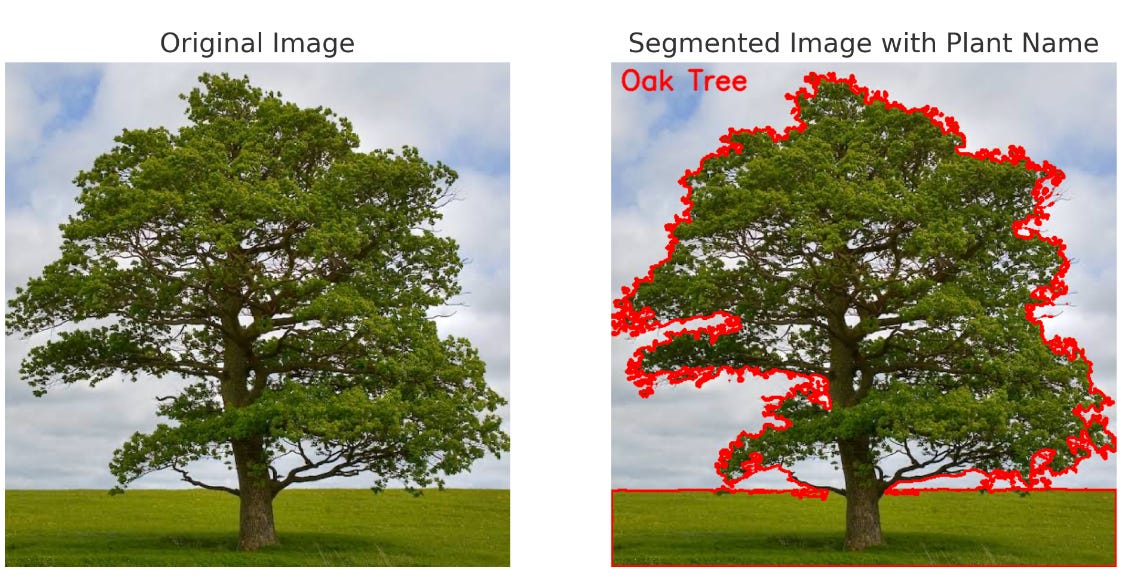

To quickly understand what I mean, take a photo of the room you are in right now. Then pass it to the new GPT-4o model in ChatGPT and ask what it sees in the photo. You will get a pretty accurate description of the relevant objects in the room, maybe even of some objects that you had not noticed yourself.

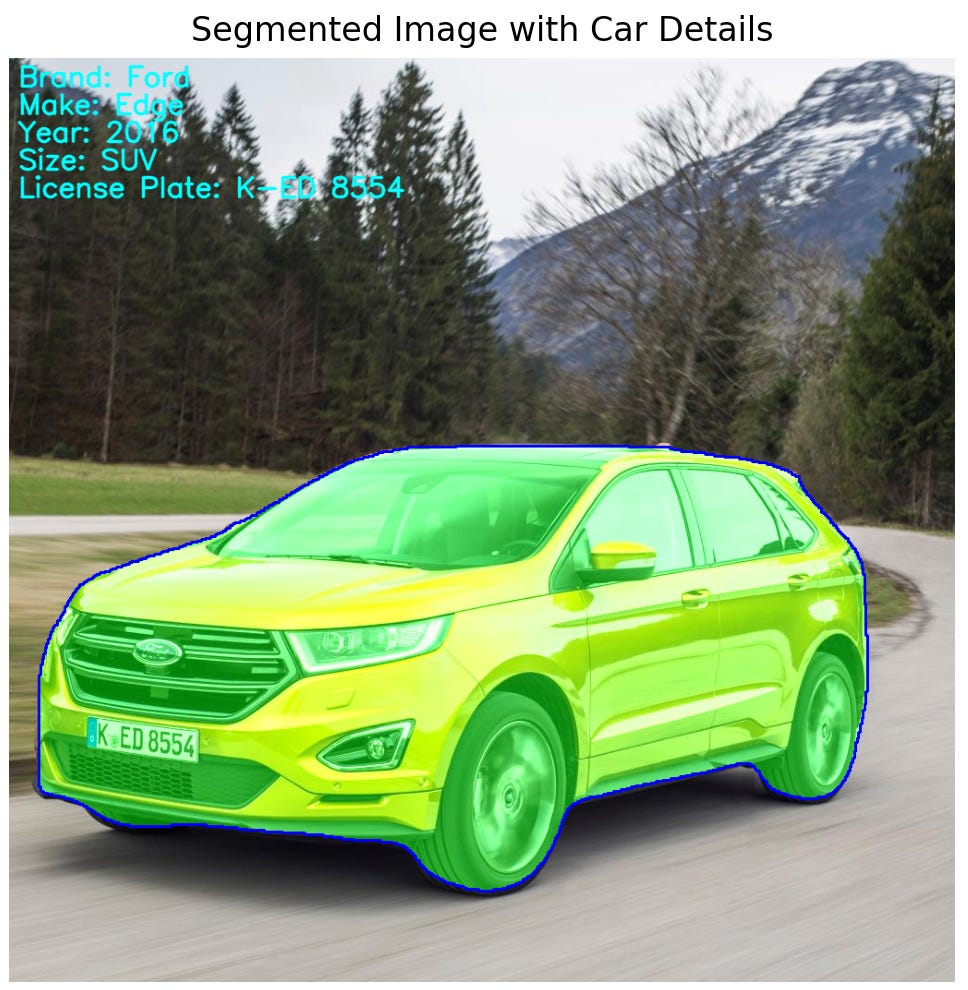

Next, take a photo of a car and ask about its color, make, and model. You will get pretty accurate results.
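If you would rather run this experiment from code than from the chat window, the request could look roughly like the sketch below. It only builds the JSON body and does not send anything. The function name `build_vision_request` and the placeholder bytes are hypothetical, and the payload shape (a `gpt-4o` model name, and a user message that combines a `text` part with an `image_url` part carrying a base64 data URL) reflects my understanding of OpenAI's Chat Completions format, so please check it against the current API reference before relying on it.

```python
import base64
import json


def build_vision_request(
    image_bytes: bytes,
    question: str,
    model: str = "gpt-4o",          # assumed model name
    mime_type: str = "image/jpeg",  # assumed image type
) -> dict:
    """Build a chat request body that asks a question about one image.

    Hypothetical helper: it only assembles the payload, it does not call any API.
    """
    # Embed the image inline as a base64 data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes instead of a real photo, just to show the structure.
    fake_photo = b"placeholder bytes, not a real JPEG"
    payload = build_vision_request(
        fake_photo, "What are the color, make and model of this car?"
    )
    print(json.dumps(payload, indent=2))
```

In a real experiment you would read the bytes from your photo file and send the payload to the API with your own key; that part is deliberately left out here.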

What happened is that OpenAI, hungry for data to train its multi-modal models, basically completed the task of “object recognition” as a “side project”. You could also see it as an “emergent property” of the model.

As OpenAI trains on publicly available data, you can assume that any image content that is publicly available will gradually find its way into the weights of the large multi-modal foundation models.

This is great news for all users of object recognition. It means that many kinds of applications that require object recognition can be built (or at least prototyped) much more easily than ever before. Even if you have very specific image data that cannot easily be scraped from the internet, multi-modal retrieval-augmented generation (RAG) is coming and can help you out. [1]

This might be bad news for companies whose USP lies in purpose-tuned object recognition models or, for example, OCR models such as ANPR (automatic number plate recognition). An intuitive way to defend their USP would be to argue that “purpose-specific models” are much better suited to running on the edge and that using a foundation model is overkill for a very specific task. However, I think that this is only temporary relief, for two reasons:

Firstly, there is a tremendous push towards improving the energy efficiency of foundation models and bringing them to the edge, either by optimizing the model architecture or by designing dedicated ASICs to run them. Secondly, economies of scale will kick in, as they did with smartphones: because smartphones are produced and used at such a high scale, each one is so cheap that using 5 % of a smartphone’s capabilities while paying 100 % of its price is still cheaper than buying application-specific hardware. [2]

If you feel a bit anxious about whether foundation models are coming for your AI business next, I want to leave you with a little side note that may offer some emotional relief in the form of what German calls “Schadenfreude”: ironically, when OpenAI (and/or its major peers) fulfill their mission of creating AGI, I believe they will put themselves out of business (for good!), too, as this will mark the beginning of a new type of “post-AGI” economy.

[1] If your business model, in comparison to your competitors’, relies on customer access or user experience rather than on the underlying AI, you could even have the “perverse incentive” to throw as much data at e.g. OpenAI as possible, so that it ends up in the original weights of the foundation models and thus further undermines your competitors’ USP.

[2] Segmentation and bounding boxes started to become commodities even earlier, through models such as YOLO or Segment Anything by Meta.